Understanding Encoder-Decoder Mechanisms (P3). Applications of Encoder-Decoder Mechanisms

Section 8: Applications of Encoder-Decoder Models

Encoder-decoder models have found diverse and impactful applications across various domains of artificial intelligence. Their ability to handle complex mappings between input and output sequences makes them versatile tools for solving a wide range of tasks. In this section, we explore some prominent applications of encoder-decoder models and how they have revolutionized the field of artificial intelligence.

8.1 Machine Translation

Machine translation is one of the earliest and most well-known applications of encoder-decoder models. These models have played a pivotal role in breaking down language barriers and enabling seamless communication across different languages. By using encoder-decoder architectures, companies like Google, Microsoft, and others have built powerful translation systems that can accurately convert text from one language to another.

Example: Google Translate utilizes an encoder-decoder model to translate text between various languages. Users input text in one language, and the encoder processes the input, creating a context representation. The decoder then generates the translated text in the desired target language.
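The encode-then-decode flow described above can be sketched in a few lines. This is a toy illustration, not how any production translation system works: the `LEXICON` lookup table, the `encode`, `decode_step`, and `translate` names, and the word-by-word mapping are all assumptions made for the example. A real model would replace them with learned neural networks. What the sketch does preserve is the control flow: the encoder runs once over the input, and the decoder then produces the output one token at a time until it emits an end-of-sequence marker.

```python
from typing import Dict, List

# Hypothetical stand-in for learned weights: a tiny English -> French table.
LEXICON: Dict[str, str] = {"the": "le", "cat": "chat", "sleeps": "dort"}
EOS = "<eos>"  # end-of-sequence marker


def encode(tokens: List[str]) -> List[str]:
    """Encoder: build a context representation of the input.

    Here the 'representation' is just the lowercased token list; a real
    encoder would produce vectors.
    """
    return [t.lower() for t in tokens]


def decode_step(context: List[str], generated: List[str]) -> str:
    """Decoder step: choose the next token from the context and the
    tokens generated so far."""
    position = len(generated)
    if position >= len(context):
        return EOS
    return LEXICON.get(context[position], "<unk>")


def translate(tokens: List[str], max_len: int = 20) -> List[str]:
    """Run the encoder once, then decode token by token until EOS."""
    context = encode(tokens)
    generated: List[str] = []
    for _ in range(max_len):
        next_token = decode_step(context, generated)
        if next_token == EOS:
            break
        generated.append(next_token)
    return generated


print(translate(["The", "cat", "sleeps"]))  # ['le', 'chat', 'dort']
```

The loop in `translate` is the part that carries over to real systems: each decoding step sees both the encoder output and everything generated so far.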

8.2 Text Summarization

Text summarization is another crucial application of encoder-decoder models. In scenarios where there is a vast amount of textual data, such as news articles, research papers, and legal documents, encoder-decoder models can automatically generate concise and coherent summaries, extracting the most salient information.

Example: News Aggregation Platforms use encoder-decoder models to summarize news articles automatically. The model reads the article and produces a short summary that captures the key points, enabling users to quickly grasp the content without reading the entire article.

8.3 Image Captioning

Encoder-decoder models have also been successfully applied to image captioning tasks. In image captioning, the encoder processes the image to extract visual features, and the decoder generates descriptive captions based on these features.

Example: Social Media Platforms leverage encoder-decoder models to automatically generate captions for user-uploaded images. The model analyses the visual content and generates a relevant, engaging caption that describes it.

8.4 Speech Recognition

Encoder-decoder models have shown promising results in automatic speech recognition (ASR) tasks. ASR systems convert spoken language into written text, and encoder-decoder architectures play a vital role in this conversion.

Example: Voice Assistants like Siri and Alexa employ encoder-decoder models to understand and transcribe users’ speech into text. The model processes the audio input and converts it into a textual representation, allowing the virtual assistant to respond accurately.

8.5 Sentiment Analysis

Sentiment analysis, also known as opinion mining, involves determining the sentiment or emotion expressed in a piece of text. Because this is a classification task, it is often handled by encoder-only models; encoder-decoder models can also be applied to it by generating the label (positive, negative, or neutral) as output text.

Example: Social Media Monitoring tools use encoder-decoder models to analyse users’ posts and comments and identify the sentiment behind them. This information is valuable for companies to gauge public opinion about their products or services.

8.6 Conversational Agents and Chatbots

Encoder-decoder models have significantly contributed to the development of conversational agents and chatbots. These models enable chatbots to understand user queries, generate relevant responses, and maintain context throughout the conversation.

Example: Customer Support Chatbots in online services utilize encoder-decoder models to interact with customers and provide them with relevant information or solutions to their queries.

Section 9: The Future of Encoder-Decoder Models

The future of encoder-decoder models is a captivating journey that holds tremendous potential for reshaping the landscape of artificial intelligence. As researchers and practitioners continue to explore and refine these architectures, we can expect to witness even more sophisticated and efficient models in the coming years.

In this section, we delve into the potential directions that encoder-decoder models may take in the future and the impact they could have on various applications.

9.1 Enhanced Performance and Efficiency: Scaling New Heights

A primary focus of future research in encoder-decoder models will be on enhancing their performance and efficiency. With the ongoing advancements in hardware and computational resources, researchers will be able to design larger and more complex models, capable of handling increasingly massive datasets.

These larger models will empower encoder-decoder architectures to capture even more nuanced details and intricate relationships in the input data, leading to improved performance across a wide range of natural language processing tasks. Moreover, breakthroughs in model compression and optimization techniques may make these larger models more feasible for real-world applications, making state-of-the-art AI accessible to a broader audience.

9.2 Multimodal Encoder-Decoder Models: A Symphony of Senses

The future heralds a convergence of sensory inputs, giving rise to a new era of multimodal encoder-decoder models. While many current encoder-decoder models handle a single input modality, such as text for machine translation and text summarization, the next generation of models will combine multiple modalities, including text, images, and speech, within one system.

Imagine a world where a single encoder-decoder model can effortlessly interpret spoken language, understand images, and generate text-based responses. For instance, a smart educational assistant could understand a student’s verbal query, analyse a diagram the student draws, and provide a detailed and contextually relevant explanation.

9.3 Few-Shot and Zero-Shot Learning: Adapting with Agility

Empowering encoder-decoder models with the ability to learn from limited labeled data (few-shot learning) or perform tasks they have never encountered before (zero-shot learning) is a paramount objective for future research.

Consider a scenario where a medical AI system can accurately diagnose a rare disease from only a few labeled patient cases, or where a translation model can translate between language pairs it has never seen together during training. Such capabilities could revolutionize domains where data scarcity has been a significant bottleneck for AI applications.

9.4 Explainable and Interpretable Models: Building Trust and Understanding

As encoder-decoder models grow in complexity and become more sophisticated, there is an increasing demand for developing techniques to make these models more explainable and interpretable. Understanding the decision-making processes of these models is essential for building trust and ensuring ethical use in critical applications, such as healthcare and autonomous vehicles.

Researchers may explore innovative methods to visualize the attention mechanisms and intermediate representations of encoder-decoder models. Such transparency will enable users and stakeholders to gain insights into the model’s reasoning process, understand its predictions, and facilitate human-machine collaborations with confidence.

9.5 Human-AI Collaborations: The Rise of Creative Synergy

Future encoder-decoder models could pave the way for unprecedented collaborations between humans and AI. In creative industries like art, music, and writing, these models can serve as creative partners, providing inspiration and enhancing human creativity.

Imagine a novel written collaboratively by an author and a language model, with the model suggesting plot twists and character development in real time. Or imagine a human musician working with an AI system to compose melodies that push the boundaries of artistic expression.

Encoder-Decoder Mechanisms in Real-Life

Section 10: Encoder-Decoder Models in Practice: Real-Life Applications

Encoder-decoder models have found a multitude of real-life applications across diverse domains, revolutionizing the way we interact with technology and enabling breakthroughs in artificial intelligence. In this section, we explore some of the prominent use cases where encoder-decoder models have made a significant impact.

10.1 Machine Translation: Breaking Language Barriers

One of the earliest and most well-known applications of encoder-decoder models is machine translation. These models have transformed the way we communicate across languages, breaking down language barriers and fostering global communication.

For instance, the Google Neural Machine Translation (GNMT) system, built on an encoder-decoder architecture with attention, dramatically improved the quality of translations between languages. Early encoder-decoder models compressed the source sentence into a single fixed-length vector before decoding; GNMT instead encodes the source into a sequence of vectors that the decoder attends to while generating each target word, which helps it produce coherent and contextually relevant translations.
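In attention-based translation systems, the decoder does not rely on one fixed-length summary of the source sentence. At each step it scores every encoder state against its current query and takes a weighted average. The sketch below shows scaled dot-product attention in plain Python, as a simplified illustration. The function names `softmax` and `attend` and the tiny two-dimensional vectors are assumptions chosen for the example, not values taken from GNMT or any other system.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: List[float],
           encoder_states: List[List[float]]) -> Tuple[List[float], List[float]]:
    """Scaled dot-product attention for a single decoder query.

    Returns the attention weights (one per encoder state) and the
    resulting context vector (weighted average of the encoder states).
    """
    dim = len(query)
    scores = [
        sum(q * k for q, k in zip(query, state)) / math.sqrt(dim)
        for state in encoder_states
    ]
    weights = softmax(scores)
    context = [
        sum(w * state[i] for w, state in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Hypothetical encoder outputs for a three-token source sentence.
states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, context = attend([0.0, 2.0], states)
print([round(w, 3) for w in weights])
print([round(c, 3) for c in context])
```

Because the query points along the second dimension, the second encoder state receives the largest weight, and the context vector leans towards it. The weights change at every decoding step, so the decoder can focus on different source words as it generates each target word.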

10.2 Text Summarization: Distilling Information

Encoder-decoder models have proven invaluable in the field of text summarization, where they can distill lengthy documents or articles into concise and informative summaries. By encoding the input text into a meaningful representation and then decoding it into a summary, these models can effectively capture the essential information from the source text.

In news applications, for instance, encoder-decoder models can automatically generate concise summaries of news articles, providing readers with quick and informative snapshots of current events.

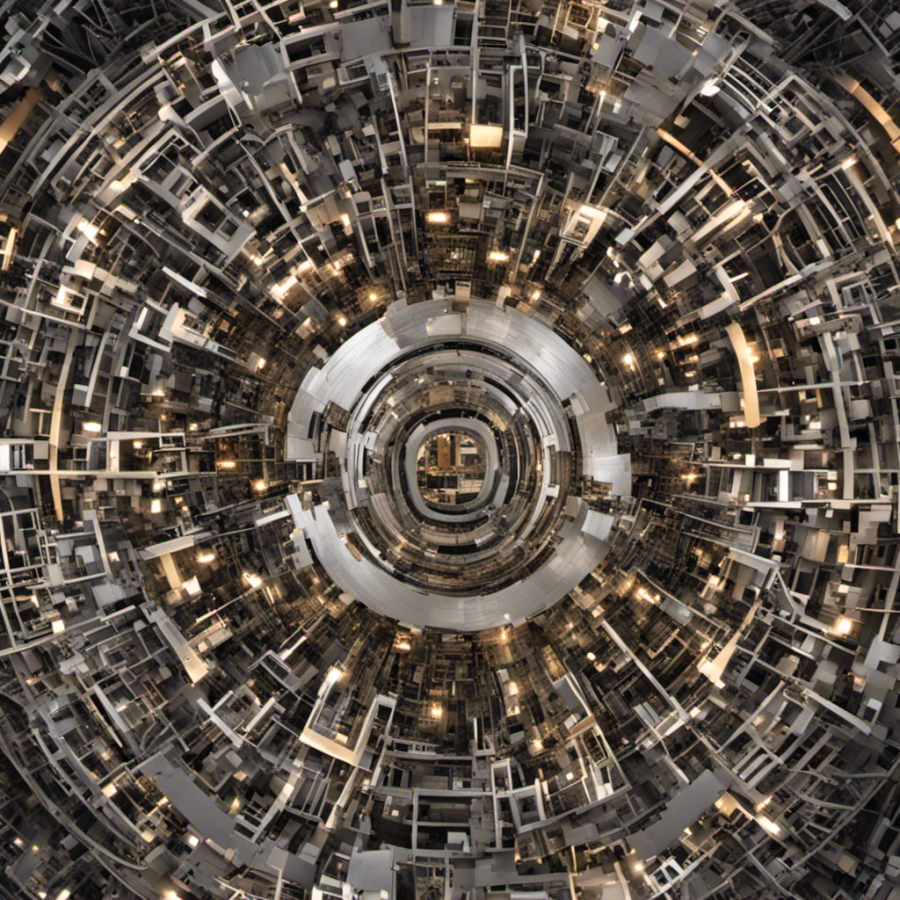

10.3 Image Captioning: Bridging Vision and Language

The marriage of computer vision and natural language processing has given rise to the fascinating application of image captioning, where encoder-decoder models generate textual descriptions for visual content.

For example, a multimodal encoder-decoder model can analyse an image, encode it into a fixed-length vector, and then decode it into a descriptive caption, effectively bridging the gap between vision and language. This capability finds practical use in applications like assistive technologies for the visually impaired and enhancing search engine capabilities.

10.4 Speech Recognition: Transforming Voice to Text

Encoder-decoder models have played a crucial role in advancing automatic speech recognition (ASR) systems. These models can convert spoken language into written text, enabling a wide range of voice-activated applications and improving accessibility for voice-controlled devices.

In virtual assistants like Siri and Alexa, encoder-decoder models decode the audio input from users into meaningful text, enabling the systems to comprehend and respond to spoken commands and queries accurately.

10.5 Conversational AI: Human-Like Interactions

Encoder-decoder models have revolutionized conversational AI systems, making interactions with virtual agents and chatbots more natural and human-like. By leveraging their ability to generate coherent and contextually relevant responses, these models enhance the conversational experience for users.

For example, chatbots powered by encoder-decoder models can hold engaging and contextually aware conversations, assisting users in various tasks like customer support, information retrieval, and even therapeutic interventions.

10.6 Question Answering: Delivering Knowledge

Encoder-decoder models have proven highly effective in question answering tasks, where they can comprehend complex questions and generate accurate and informative responses.

For instance, in a medical setting, these models can provide detailed answers to medical queries, serving as valuable resources for healthcare professionals and patients seeking reliable information.

10.7 Creative Content Generation: Augmenting Creativity

Beyond functional applications, encoder-decoder models have opened new avenues for creative content generation. From creative writing to art and music, these models have demonstrated the capacity to collaborate with human creators and augment their creativity.

Artists can partner with encoder-decoder models to co-create paintings, generating novel and imaginative pieces. Similarly, writers can collaborate with language models to craft captivating stories, pushing the boundaries of narrative imagination.

Distilling Information; Ethical Considerations; Conclusion

Section 11: Encoder-Decoder Models and Ethical Considerations

As encoder-decoder models continue to advance and find widespread applications, addressing the ethical implications becomes paramount to ensure responsible AI development and deployment. This section explores the ethical challenges posed by encoder-decoder models and delves into potential solutions to foster fairness, transparency, and accountability in their use.

11.1 Bias and Fairness: Unveiling Hidden Prejudices

Encoder-decoder models, like other AI systems, are vulnerable to inheriting biases present in their training data. Biases can emerge from societal prejudices or historical imbalances, leading to unfair or discriminatory outcomes when deployed in real-world scenarios.

To mitigate bias, researchers and developers must adopt rigorous data collection and preprocessing practices. Careful curation of diverse and representative datasets can help reduce bias and ensure that the model learns from a comprehensive range of perspectives. Moreover, employing fairness-aware training techniques and evaluation metrics can aid in identifying and rectifying biased outputs during model development.
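One simple example of a fairness evaluation metric is the demographic parity gap: the difference between the highest and lowest rate of positive predictions across groups. The sketch below is a minimal illustration on made-up data. The function names and the group labels `"a"` and `"b"` are hypothetical, and demographic parity is only one of several fairness criteria, which can conflict with one another.

```python
from collections import defaultdict
from typing import Dict, List


def positive_rate_by_group(predictions: List[int],
                           groups: List[str]) -> Dict[str, float]:
    """Fraction of positive (1) predictions within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions: List[int], groups: List[str]) -> float:
    """Largest difference in positive-prediction rate between any two groups.

    A gap of 0.0 means every group receives positive predictions at the
    same rate; larger values indicate a larger disparity.
    """
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up model outputs for two hypothetical groups.
preds = [1, 1, 0, 1, 0, 0, 0, 1]
group_labels = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rate_by_group(preds, group_labels))  # {'a': 0.75, 'b': 0.25}
print(demographic_parity_gap(preds, group_labels))  # 0.5
```

A check like this can run alongside accuracy metrics during evaluation, so that a disparity is noticed before the model is deployed rather than after.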

11.2 Privacy and Data Protection: Safeguarding Sensitive Information

Encoder-decoder models often require large datasets for training, potentially involving sensitive personal or proprietary information. Ensuring privacy and data protection is crucial to maintain user trust and comply with legal and regulatory requirements.

Implementing privacy-preserving techniques, such as data anonymization and encryption, can safeguard sensitive data during model training and inference. Additionally, organizations must adhere to stringent data protection guidelines and obtain explicit user consent for data collection and processing.
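As a rough illustration of one preprocessing step, the sketch below masks email addresses and phone-like numbers in text before it is used for training. The patterns and the `redact` function are assumptions made for this example. Pattern-based redaction is a weak form of anonymization: it misses names, addresses, and many other identifiers, so it should be read as a starting point rather than a complete privacy solution.

```python
import re

# Illustrative patterns only; real-world identifiers are far more varied.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text


sample = "Contact jane.doe@example.com or +1 (555) 123-4567 today."
print(redact(sample))  # Contact [EMAIL] or [PHONE] today.
```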

11.3 Transparency and Explainability: Decoding the Black Box

The inherent complexity of encoder-decoder models can render them opaque and difficult to interpret, raising concerns about transparency and explainability. This lack of transparency can hinder understanding, leading to scepticism and distrust of AI systems.

Enhancing model transparency requires the development of interpretable model architectures and the integration of explainability techniques. Attention visualization, saliency maps, and feature attribution methods can provide insights into how the model arrives at specific predictions, increasing trust and facilitating accountability.
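Feature attribution can be illustrated with a simple occlusion (leave-one-out) method: remove each input token in turn and measure how much the model's score changes. In the sketch below, the `score` function is a hypothetical stand-in for a trained model and uses a small hand-written weight table; the names and weights are assumptions for the example. The same loop works with any function that maps a token list to a number.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for a trained model's learned behaviour.
WEIGHTS: Dict[str, float] = {
    "great": 2.0, "good": 1.0, "bad": -1.0, "terrible": -2.0,
}


def score(tokens: List[str]) -> float:
    """Toy sentiment score: sum of per-token weights."""
    return sum(WEIGHTS.get(t, 0.0) for t in tokens)


def occlusion_attribution(
    tokens: List[str],
    score_fn: Callable[[List[str]], float] = score,
) -> List[Tuple[str, float]]:
    """Attribute the score to each token by removing it and re-scoring.

    A token's attribution is the full-input score minus the score
    obtained with that token left out.
    """
    baseline = score_fn(tokens)
    attributions = []
    for i, token in enumerate(tokens):
        reduced = tokens[:i] + tokens[i + 1:]
        attributions.append((token, baseline - score_fn(reduced)))
    return attributions


for token, contribution in occlusion_attribution(["the", "food", "was", "great"]):
    print(f"{token:>6}: {contribution:+.1f}")
```

Here only "great" receives a non-zero attribution, which matches the toy scorer's weights. With a real model the attributions are less clean, but the method needs nothing beyond the ability to run the model on modified inputs.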

11.4 Misinformation and Deepfakes: Tackling the Dark Side

Encoder-decoder models’ proficiency in generating coherent and contextually relevant text can be exploited to create sophisticated misinformation and deepfake content. This poses significant challenges in combatting the spread of fake news and disinformation.

To address this issue, content moderation systems must be augmented with advanced AI tools to detect and filter out misinformation generated by encoder-decoder models. Additionally, research efforts should focus on developing robust deepfake detection algorithms to identify and mitigate malicious use.

11.5 Human Supervision and Responsibility: The Guiding Hand

Encoder-decoder models are powerful tools but must be guided by human supervision and responsibility. While they can achieve impressive results, they should not operate as autonomous decision-makers.

Human oversight is essential throughout the AI development lifecycle, from data collection and model training to deployment. Establishing clear guidelines and boundaries for model use, along with human-in-the-loop mechanisms, can help ensure ethical and accountable AI practices.
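One common way to build a human-in-the-loop mechanism is to route low-confidence outputs to a reviewer instead of releasing them automatically. The sketch below is a minimal illustration: the `Prediction` class, the `route` function, the queue names, and the 0.9 threshold are all hypothetical choices for the example, and a suitable threshold would depend on the application and on how well calibrated the model's confidence scores are.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    text: str          # the model's output
    confidence: float  # the model's own confidence, between 0 and 1


def route(prediction: Prediction, threshold: float = 0.9) -> str:
    """Send confident outputs onward; send the rest to a human reviewer."""
    if prediction.confidence >= threshold:
        return "auto_approve"
    return "human_review"


outputs = [
    Prediction("Your order has shipped.", 0.97),
    Prediction("You are eligible for a refund.", 0.62),
]
for p in outputs:
    print(route(p), "-", p.text)
```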

11.6 Dual-Use Dilemma: Balancing Innovation and Responsibility

Encoder-decoder models, like many AI technologies, present a dual-use dilemma, where they can serve both beneficial and harmful purposes. While they offer numerous positive applications, they can also be misused for malicious intent, such as generating deepfake content for misinformation campaigns.

To address this challenge, collaboration among policymakers, researchers, and industry stakeholders is vital. Developing responsible guidelines and frameworks for the ethical deployment of encoder-decoder models can strike a balance between innovation and responsible use, mitigating potential risks.

Conclusion

The journey of encoder-decoder models in artificial intelligence has been one of continuous innovation and advancement. From early recurrent sequence-to-sequence models, through the original transformer architecture, to the development of sophisticated encoder-only and decoder-only variants, these models have revolutionized the field of AI and natural language processing.

Throughout this comprehensive exploration, we have witnessed how encoders and decoders work in tandem to transform one sequence into another, making them invaluable for tasks such as machine translation, text summarization, and sentiment analysis. The advent of the transformer model marked a significant milestone, introducing self-attention mechanisms that allowed models to process data in parallel, leading to faster and more efficient AI systems capable of capturing long-term dependencies.

The Power of Encoder-Decoder Hybrids

While encoder-only and decoder-only models have demonstrated remarkable capabilities in their respective domains, encoder-decoder hybrids combine the best of both worlds, leveraging the strengths of both components to handle complex natural language processing tasks. Models like BART (Bidirectional and Auto-Regressive Transformers) have emerged as powerful solutions capable of understanding and generating text effectively, paving the way for more versatile and contextually aware AI systems.

Ethical Considerations in AI

As encoder-decoder models become increasingly pervasive, it is essential to address the ethical implications surrounding their development and use. Bias and fairness, privacy and data protection, transparency and explainability, misinformation and deepfakes, human supervision, and the dual-use dilemma are critical ethical challenges that demand responsible AI practices.

Researchers, developers, policymakers, and industry stakeholders must collaborate to establish guidelines and frameworks that prioritize fairness, transparency, and human oversight in AI development and deployment. By proactively addressing these ethical considerations, we can build AI systems that benefit society while upholding principles of fairness, accountability, and respect for privacy.

The Future of Encoder-Decoder Models

As the field of AI continues to evolve, the future of encoder-decoder models holds immense promise. Advancements in hardware and algorithms will enable the development of larger and more sophisticated models, capable of handling increasingly complex tasks and datasets. Multimodal encoder-decoder models will emerge, bridging the gap between different types of data and revolutionizing applications like video captioning and speech-to-text systems.

Additionally, researchers will focus on addressing the challenges of few-shot and zero-shot learning, enabling models to learn new tasks with minimal training data. Explainability will remain a focal point, with efforts to enhance the transparency of encoder-decoder models, empowering users to understand and trust AI systems’ decisions.

Empowering Human Creativity and Collaboration

Beyond functional applications, encoder-decoder models will empower human creativity and collaboration, leading to exciting possibilities in creative content generation, art, and storytelling. Collaborative AI systems, where humans and AI work together, will leverage encoder-decoder models to augment human creativity, productivity, and problem-solving capabilities across various domains.

In Closing

The evolution of encoder-decoder models has shaped the landscape of artificial intelligence, redefining how we process and understand language. As we move forward, it is essential to navigate the ethical dimensions, adhering to principles that prioritize fairness, transparency, and human supervision. By embracing responsible AI practices, we can harness the full potential of encoder-decoder models to build a future where AI technologies enrich and empower humanity.

About The Author

Bogdan Iancu

Bogdan Iancu is a seasoned entrepreneur and strategic leader with over 25 years of experience in diverse industrial and commercial fields. His passion for AI, Machine Learning, and Generative AI is underpinned by a deep understanding of advanced calculus, enabling him to leverage these technologies to drive innovation and growth. As a Non-Executive Director, Bogdan brings a wealth of experience and a unique perspective to the boardroom, contributing to robust strategic decisions. With a proven track record of assisting clients worldwide, Bogdan is committed to harnessing the power of AI to transform businesses and create sustainable growth in the digital age.