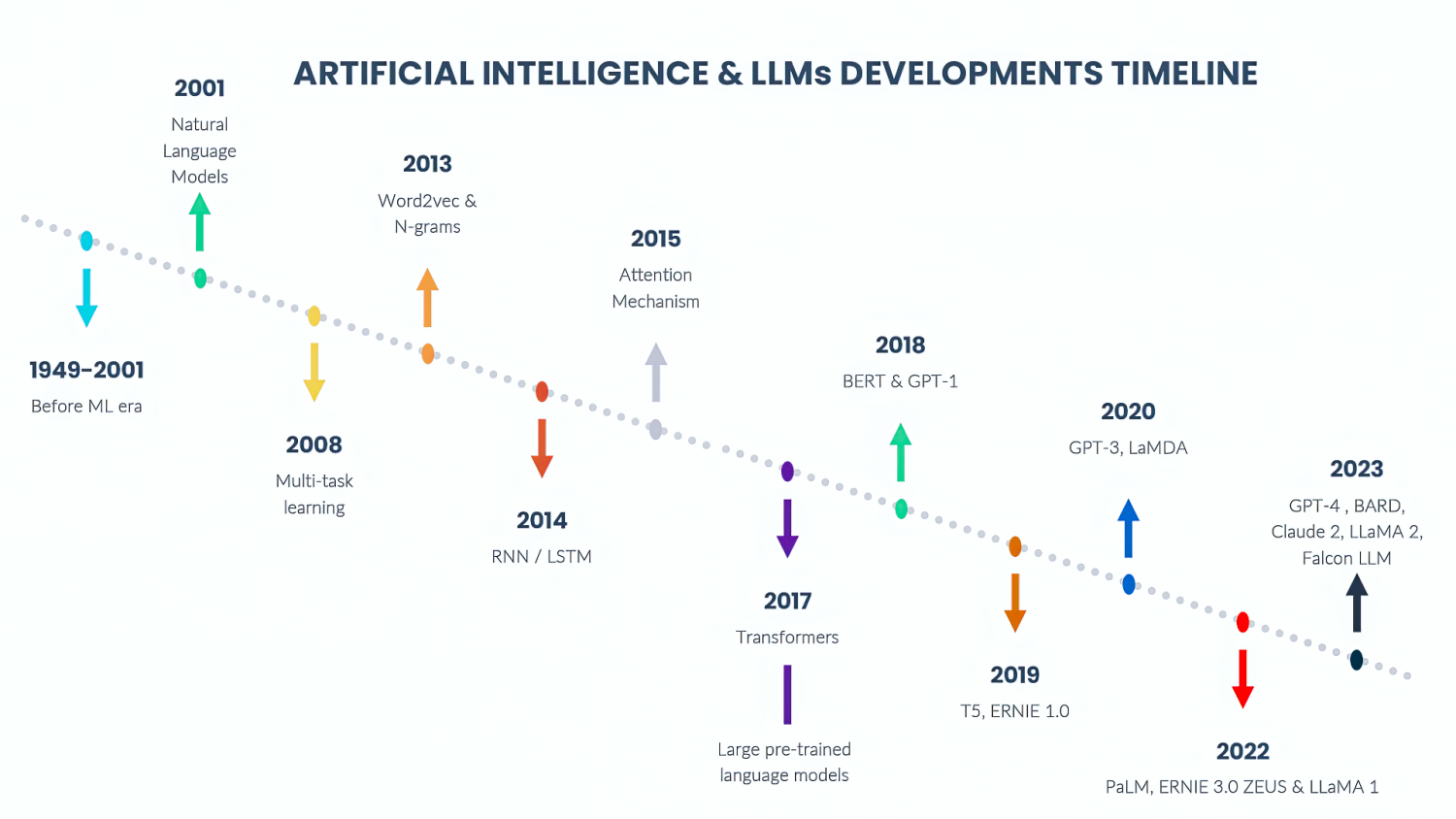

History of Machine Learning and AI development

Over the course of more than seven decades, the fields of machine learning and artificial intelligence have undergone a remarkable journey of development, transforming the way we interact with technology and expanding the boundaries of what AI can achieve. Let’s have a look at this captivating timeline, from 1949 to 2023, to understand the extraordinary evolution of AI and language processing.

In the early 2000s, Natural Language Models emerged, marking the first steps toward teaching machines how to comprehend and process human language. Then, in 2008, Multi-Task Learning entered the stage, enabling models to tackle multiple tasks simultaneously, broadening the scope of AI applications.

As we progressed to 2013, Word2Vec and N-grams brought exciting advancements, allowing machines to represent words as numerical vectors and extract meaningful patterns from text data, empowering language models with enhanced understanding.

By 2014, Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) models, both introduced years earlier, had come into widespread use for language tasks, enabling AI systems to retain and process sequential information and making them more context-aware and more powerful.

The year 2015 brought a game-changing development with the Attention Mechanism, allowing models to focus on crucial parts of input data, revolutionizing machine translation and other natural language processing tasks.

In 2017, Transformers made their debut, redefining language modeling by replacing the sequential processing of RNNs with parallel processing, enhancing efficiency and scalability, and paving the way for Large Pre-Trained Language Models (LLMs).

Then came 2018, the year of monumental developments, with BERT and GPT-1, the pioneers of LLMs, showing exceptional abilities to grasp contextual information from vast data, setting the bar for language understanding.

In 2019, the stage was further enlivened with T5 and ERNIE 1.0, pushing the boundaries of AI’s language comprehension and demonstrating its practical potential.

2020 was a year of remarkable achievements with GPT-3 and LaMDA captivating the world, showcasing mind-boggling capabilities for generating human-like text and engaging in natural conversations.

Fast forward to 2022, and the LLM evolution continued with PaLM and ERNIE 3.0 ZEUS, bringing AI-driven language processing to new heights.

And finally, in 2023, the timeline culminated in the introduction of LLaMA, GPT-4, Llama 2, Bard, Claude 2, and Falcon LLM, as AI’s prowess in interactive dialogue systems and large-scale language models reached new horizons.

Throughout this awe-inspiring timeline, the relentless pursuit of innovation and discovery by brilliant minds from around the world has propelled AI and machine learning from humble beginnings to the astonishing heights of today. As we look forward to the future, the potential for AI to continue shaping our world is boundless, promising an era of unprecedented possibilities, discoveries, and advancements.

- Word2vec: Let’s imagine Word2vec as a tool that learns the intricate web of relationships between words by studying a large body of text. This technique, which emerged in 2013, uses a neural network model to grasp word associations. At its core, Word2vec is highly capable of identifying synonymous words or suggesting words to round off a sentence. Each word is represented by a unique list of numbers, known as a vector, learned so that it encapsulates the word’s meaning and usage. The beauty of this approach is that a simple mathematical function, cosine similarity, can reveal the semantic similarity between words based on these vectors. In other words, it has proven a practical and effective way to decode language.
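To make the cosine similarity idea concrete, here is a minimal sketch. The three-dimensional vectors below are made up for illustration (real Word2vec vectors are learned from text and usually have a few hundred dimensions), so only the relative ordering of the scores is meaningful.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors, NOT taken from a trained Word2vec model.
vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.8, 0.9, 0.1],
    "apple": [0.1, 0.2, 0.9],
}

related = cosine_similarity(vectors["king"], vectors["queen"])
unrelated = cosine_similarity(vectors["king"], vectors["apple"])
print(f"king vs queen: {related:.3f}")
print(f"king vs apple: {unrelated:.3f}")
```

A score near 1 means the two vectors point in almost the same direction, which is how words with similar usage end up looking "close" to each other.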

- N-Gram: Picture this: you’re trying to piece together a linguistic puzzle, and each piece is an n-gram. It’s a sequence of ‘n’ items, which could be phonemes, syllables, letters, words, or even base pairs, all extracted from a sample of text or speech. These n-grams are like the DNA of language, and they provide a unique snapshot of language in action. The ‘n’ in n-gram can vary, with Latin numerical prefixes, as in “unigram”, “bigram”, and “trigram”, indicating the size. A unigram is a single item, a bigram is a sequence of two, and a trigram is a sequence of three. It’s like a sliding window moving through a sentence, capturing a specific sequence of items at each position. In the world of computational biology, these sequences go by a different name, becoming k-mers. Regardless of whether you call them n-grams or k-mers, they’re a fascinating way to dissect and understand language, one sequence at a time. They’re the building blocks that help us analyze and predict language patterns, making them invaluable in fields like natural language processing and machine learning.
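The sliding-window picture of n-grams translates almost directly into code. The helper name `ngrams` below is just an illustrative choice; the same function works on a list of words or on a string of characters.

```python
def ngrams(items, n):
    """Return every run of n consecutive items, sliding one position at a time."""
    return [tuple(items[i:i + n]) for i in range(len(items) - n + 1)]

words = "the quick brown fox".split()

unigrams = ngrams(words, 1)
bigrams = ngrams(words, 2)
kmers = ngrams("GATTACA", 3)  # character 3-grams, i.e. k-mers with k = 3

print(bigrams)  # [('the', 'quick'), ('quick', 'brown'), ('brown', 'fox')]
print(kmers[0])  # ('G', 'A', 'T')
```

A sequence of length L yields L - n + 1 n-grams, and an empty list when n is larger than the sequence.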

- RNNs and LSTMs: Recurrent Neural Networks (RNNs) are a class of artificial neural networks where connections between nodes form a directed graph along a temporal sequence. This allows them to use their internal state (memory) to process sequences of inputs. The core idea behind RNNs is to make use of sequential information by performing the same task for every element in a sequence, with the output being dependent on the previous computations. However, RNNs have a significant issue known as the vanishing gradient problem. As they backpropagate to learn, they multiply through all the derivatives of the weights used in the computations. If these weights are small (less than 1), the gradient decreases exponentially quickly as it propagates down, effectively “vanishing.”
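A rough way to see the vanishing gradient is to multiply the same small number by itself many times. The sketch below makes a strong simplifying assumption: the per-step derivative is a single constant `factor`, whereas in a real RNN it varies from step to step.

```python
def gradient_scale(factor, steps):
    """Multiply the same per-step factor `steps` times, as backpropagation through time does."""
    scale = 1.0
    for _ in range(steps):
        scale *= factor
    return scale

# With a factor below 1, the signal from distant time steps all but disappears.
for steps in (1, 10, 50):
    print(steps, gradient_scale(0.5, steps))
```

After 50 steps with a factor of 0.5 the gradient is smaller than one part in a trillion, which is why plain RNNs struggle to learn from events far back in a sequence.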

- Long Short-Term Memory (LSTM) networks: LSTMs are a special kind of RNN, capable of learning long-term dependencies. They were introduced by Hochreiter & Schmidhuber in 1997 and were refined and popularized by many people in subsequent work. LSTMs work by introducing a new structure called a memory cell. A memory cell is composed of four main elements: (1) an input gate, (2) a neuron with a self-recurrent connection (a connection to itself), (3) a forget gate, and (4) an output gate. The self-recurrent connection has a weight of 1.0 and ensures that, barring any outside interference, the state of a memory cell can remain constant from one time step to another. The magic of LSTMs comes from their ability to forget, remember, and update their cell states, thanks to the gating mechanisms. The gates in an LSTM are analog, implemented as sigmoid neural network layers. They output numbers between 0 and 1, describing how much of each component should be let through. A value of zero means “let nothing through,” while a value of one means “let everything through.” This unique architecture allows LSTMs to selectively remember or forget things, which makes them particularly good at understanding the context within sequences of data. They’re effectively capable of remembering important details while letting go of the irrelevant ones.
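The gating described above can be sketched for a single scalar memory cell. This follows the commonly used LSTM formulation rather than any specific library, and the weight values are hypothetical, picked by hand so that the forget gate stays open and the input gate stays shut.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, w):
    """One time step of a scalar LSTM cell. `w` maps gate name -> (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = w[name]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("input"))         # how much new information to write
    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # candidate value to write

    c = f * c_prev + i * g            # updated cell state
    h = o * math.tanh(c)              # new hidden state
    return h, c

# Hypothetical weights: forget gate ~1 ("let everything through"), input gate ~0.
weights = {
    "input":     (0.0, 0.0, -10.0),
    "forget":    (0.0, 0.0, 10.0),
    "output":    (0.0, 0.0, 10.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = lstm_step(x=5.0, h_prev=0.0, c_prev=1.0, w=weights)
print(c)  # stays very close to the previous cell state of 1.0
```

Because the forget gate is nearly 1 and the input gate nearly 0, the cell "remembers" its old state and ignores the new input, which is exactly the selective memory the text describes.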

- Machine learning-based attention mechanism: Let’s imagine for a second that machine learning is a bustling city, and attention mechanisms are its traffic control system. These mechanisms guide the flow of information, assigning different “soft” weights to each word in a context window, much like traffic lights control the movement of vehicles at intersections. These weights aren’t static like the buildings in our city analogy; they’re dynamic, recomputed for every input the model processes. This is in stark contrast to “hard” weights, which are learned during pre-training and fine-tuning and then remain constant. Now, let’s think of attention mechanisms as the city’s selective spotlight, illuminating the areas (or words) that are most crucial for making a prediction, while dimming the less relevant parts. This selective focus boosts the model’s accuracy and efficiency, akin to how a well-planned city runs smoothly. In the early days, similar mechanisms were used in recurrent neural networks. However, these networks processed words sequentially, not in parallel. They would consider the current word and the words within the context window to update their hidden state and make predictions, much like a city planner considering various factors before making a decision. How do they work? Mathematically, the “soft” weights are calculated using the softmax function, which converts a vector of numbers into a vector of probabilities. These probabilities then determine the importance of each word in the context window. So, in essence, attention mechanisms in machine learning are like the traffic control system of a bustling city, guiding the flow of information to ensure the model runs smoothly and efficiently.
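Here is a small sketch of how softmax turns raw scores into “soft” weights. The scoring rule used (a scaled dot product between a query and each key) is an assumption borrowed from the Transformer family, since the text above only names softmax, and the toy vectors are invented for illustration.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Weight each value by how well its key matches the query (scaled dot product)."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, output

# Hypothetical toy data: three "words", each with a 2-d key and a 1-d value.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0], [20.0], [30.0]]

weights, output = attend([1.0, 0.0], keys, values)
print(weights)  # the first key matches the query best, so it gets the largest weight
print(output)
```

The weights are recomputed for every query, which is what makes them “soft” and dynamic, in contrast to the fixed parameters of the network.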

- Transformer Models: Let’s continue our analogies, so just picture that you’re at a party with a bunch of people, and you’re trying to listen to multiple conversations at once. Some people are louder, some are quieter, and some are more interesting than others. Your brain, being the amazing machine it is, can focus on the important bits and tune out or discard the rest. That’s kind of what a Transformer model does with data. Introduced by the Google Brain team in a paper called ‘Attention Is All You Need’ in 2017, the Transformer model is a type of deep learning architecture. It’s the cool new kid that everyone wants to hang out with because it’s faster, smarter, and more efficient than the older kids, like recurrent neural architectures such as long short-term memory (LSTM). The Transformer model uses the attention mechanism, which decides which parts of the data to focus on and which parts to ignore. This makes it great for tasks like language translation, where understanding the context and nuances of words is crucial.

Now, let’s dive into the main components of a Transformer model:

- Tokenizers: They break down the input data (like text) into smaller pieces called tokens.

- Embedding layers: They convert the tokens into a format (vectors) that the model can understand.

- Transformer layers: They process the tokens, decide which ones are important (using the attention mechanism), and make predictions based on that.
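The first two components in the list above can be sketched in a few lines. This is a deliberately simplified illustration: real tokenizers split text into subword units rather than whole words, and real embedding tables are learned during training rather than filled with random numbers. All function names here are hypothetical.

```python
import random

def tokenize(text):
    """Split text into lowercase word tokens (real tokenizers use subword units)."""
    return text.lower().split()

def build_vocab(tokens):
    """Assign each distinct token an integer id in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab

def make_embeddings(vocab_size, dim, seed=0):
    """Create a random embedding table; in a trained model these values are learned."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(vocab_size)]

tokens = tokenize("The cat sat on the mat")
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]
table = make_embeddings(len(vocab), dim=4)
vectors = [table[i] for i in ids]  # one vector per token, ready for the Transformer layers

print(ids)  # [0, 1, 2, 3, 0, 4]
```

Note that both occurrences of "the" map to the same id and therefore the same vector; it is the Transformer layers that later give each occurrence a context-dependent meaning.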

There are two types of Transformer layers: encoders and decoders. The original Transformer model used both, but later models experimented with using just one or the other.

- Encoders process the input data and pass it on to the decoders.

- Decoders take the processed data from the encoders and generate the final output.

In a nutshell, a Transformer model is like a really smart party-goer. It listens to all the conversations (data), figures out which ones are important (attention mechanism), and then makes predictions based on that. It’s a game-changer in the field of machine learning and has been used in everything from natural language processing to computer vision.

Large Language Models (LLMs). BERT | GPT-3 | PaLM | LaMDA

Large Language Models (LLMs) are the giants of the AI world, boasting billions of parameters that help them understand and generate human-like text. These models are trained using a combination of self-supervised and semi-supervised learning methods.

In self-supervised learning, the models are trained to predict parts of the input data from other parts of the same input data. For instance, given a sentence with a missing word, the model’s task is to predict the missing word based on the context provided by the rest of the sentence. This method allows the model to learn the structure and semantics of the language without needing any explicit labels.
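The missing-word task needs no human labels because the training pairs come straight from the text itself. A minimal sketch, assuming whole-word masking and a `[MASK]` placeholder (both illustrative choices):

```python
def masked_examples(sentence, mask_token="[MASK]"):
    """Build (masked sentence, missing word) pairs, one per word position."""
    words = sentence.split()
    examples = []
    for i, target in enumerate(words):
        masked = words[:i] + [mask_token] + words[i + 1:]
        examples.append((" ".join(masked), target))
    return examples

examples = masked_examples("the cat sat")
for masked, target in examples:
    print(masked, "->", target)
# [MASK] cat sat -> the
# the [MASK] sat -> cat
# the cat [MASK] -> sat
```

Every sentence in a corpus can be turned into training examples this way, which is why the approach scales to Internet-sized datasets.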

On the other hand, semi-supervised learning is a method that combines a small amount of labeled data with a large amount of unlabeled data during training. The idea is to use the labeled data to guide the learning process and then use the model’s predictions on the unlabeled data to further refine the model. This method is particularly useful when obtaining labeled data is expensive or time-consuming.
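One simple flavor of semi-supervised learning is often called pseudo-labeling or self-training. The sketch below uses a toy nearest-centroid classifier on made-up one-dimensional data; it illustrates the general idea and is not the procedure any particular LLM uses.

```python
def centroids(points, labels):
    """Mean of the points belonging to each label."""
    sums, counts = {}, {}
    for p, y in zip(points, labels):
        sums[y] = sums.get(y, 0.0) + p
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict(model, p):
    """Label of the nearest centroid."""
    return min(model, key=lambda y: abs(model[y] - p))

# Hypothetical data: two labeled points and several unlabeled ones.
labeled_x, labeled_y = [1.0, 9.0], ["low", "high"]
unlabeled_x = [0.5, 2.0, 3.0, 7.0, 8.5, 10.0]

model = centroids(labeled_x, labeled_y)                  # step 1: fit on the labeled data
pseudo_y = [predict(model, p) for p in unlabeled_x]      # step 2: label the unlabeled data
model = centroids(labeled_x + unlabeled_x,               # step 3: refit on everything
                  labeled_y + pseudo_y)

print(pseudo_y)
print(model)
```

The refit centroids are informed by eight points instead of two, which is the payoff of letting a little labeled data guide the use of a lot of unlabeled data.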

These learning methods, combined with the power of artificial neural networks and specialized AI accelerator hardware, allow LLMs to process vast amounts of text data, most of which is scraped from the Internet. The models learn not just the syntax and semantics of language, but also the biases and inaccuracies present in the data they are trained on.

Prominent examples of LLMs include GPT-4, LLaMa, PaLM, BERT, BLOOM, Ernie 3.0 Titan, and Claude. These models, with their advanced learning methods and immense size, have revolutionized the field of natural language processing, making older, specialized models for specific linguistic tasks largely obsolete.

- BERT, or Bidirectional Encoder Representations from Transformers, is like the celebrity of the language model world. It was introduced by Google’s AI development team back in 2018 and has since become a cornerstone in natural language processing (NLP) experiments. Imagine BERT as a diligent student who absorbs knowledge from a vast library of text, then uses that knowledge to understand language in context. BERT comes in two sizes: BERT-Base and BERT-Large. BERT-Base is like a compact car, efficient and nimble, with 12 encoder layers and 12 bidirectional self-attention heads, totaling 110 million parameters. On the other hand, BERT-Large is the SUV of the family, boasting 24 encoder layers with 16 bidirectional self-attention heads, totaling a whopping 340 million parameters. Both models were trained on a vast amount of text data from the Toronto BookCorpus and English Wikipedia.

- Now, let’s talk about T5 or Text-to-Text Transfer Transformers. T5 is like a universal translator in the world of language models. It treats every task – be it translation, question answering, or classification – as a text-to-text problem. It’s like having a conversation with the model: you give it some text, and it responds with some other text. This approach allows for a consistent model, loss function, and hyperparameters across a diverse set of tasks. T5 added a few twists to the BERT model. It introduced a causal decoder to the bidirectional architecture, which is like giving the model a sense of time and sequence. It also replaced the fill-in-the-blank task with a mix of alternative pre-training tasks, making the model more versatile. In terms of technical details, T5 uses three main objectives: (i) language modeling (predicting the next word), (ii) BERT-style objective (masking/replacing words and predicting the original text), and (iii) deshuffling (randomly shuffling the input and trying to predict the original text). It also uses different corruption strategies and span lengths, which have been shown to be effective in training the model. In a nutshell, BERT and T5 are like the dynamic duo of language models, each with its unique strengths and capabilities, revolutionizing the field of natural language processing.
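The three objectives listed for T5 can all be written as plain (input text, target text) pairs, which is the whole point of the text-to-text framing. The sketch below is a simplified illustration: the `<M>` mask symbol, the function names, and the word-level corruption are assumptions, not the exact preprocessing used to train T5.

```python
import random

def lm_example(words, split):
    """Language modeling: predict the continuation from a prefix."""
    return " ".join(words[:split]), " ".join(words[split:])

def mask_example(words, positions, mask="<M>"):
    """BERT-style: replace chosen words with a mask; the target is the original text."""
    corrupted = [mask if i in positions else w for i, w in enumerate(words)]
    return " ".join(corrupted), " ".join(words)

def deshuffle_example(words, seed=0):
    """Deshuffling: shuffle the words; the target is the original order."""
    shuffled = words[:]
    random.Random(seed).shuffle(shuffled)
    return " ".join(shuffled), " ".join(words)

words = "thank you for inviting me".split()

print(lm_example(words, 2))         # ('thank you', 'for inviting me')
print(mask_example(words, {1, 3}))  # ('thank <M> for <M> me', 'thank you for inviting me')
print(deshuffle_example(words))
```

Because every objective produces the same kind of pair, one model and one loss function can be reused across all of them.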

- GPT-3, also known as Generative Pretrained Transformer 3, is a state-of-the-art autoregressive language model that uses machine learning to produce human-like text. It was developed by OpenAI and introduced in June 2020. The model is built on a transformer architecture and uses attention mechanisms to focus on relevant parts of the input text. GPT-3 is the third iteration of the GPT series and is significantly larger than its predecessors. It has 175 billion machine learning parameters, compared to 1.5 billion in GPT-2. This makes GPT-3 one of the largest and most powerful language models to date. The model is capable of tasks such as translation, question-answering, and text generation without any task-specific training data, demonstrating strong zero-shot and few-shot learning capabilities. This means it can understand and respond to prompts in a way that is contextually relevant, even if it hasn’t been explicitly trained on similar prompts. In September 2020, Microsoft announced that it had obtained an exclusive license to GPT-3’s underlying code, allowing it to incorporate the technology into its products and services. However, OpenAI continues to offer access to GPT-3 through a public API, which developers can use to build applications that leverage the model’s capabilities. Despite its impressive capabilities, GPT-3 has also raised concerns about its potential misuse. For example, it could be used to generate misleading news articles, spam, or abusive content. OpenAI has acknowledged these concerns and has implemented measures to mitigate potential misuse, such as output filtering in its API and a research effort to make the model understand and respect user-set boundaries.
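Zero-shot and few-shot learning happen entirely inside the prompt: the model’s weights are not updated, and the “training” is just a handful of worked examples placed before the question. The sketch below only builds such a prompt as a string; the `Input:`/`Output:` layout is a hypothetical convention, and no API call is made.

```python
def few_shot_prompt(task, examples, query):
    """Build a prompt: task description, worked examples, then the unanswered query."""
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

task = "Translate English to French."
zero_shot = few_shot_prompt(task, [], "cheese")
two_shot = few_shot_prompt(task, [("sea otter", "loutre de mer"), ("bread", "pain")], "cheese")

print(two_shot)
```

An autoregressive model then simply continues the text after the final `Output:`, so the examples steer it toward the desired format and task.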

- PaLM, or Pathways Language Model, is a brainchild of Google AI, boasting a massive 540 billion parameters. This model was developed using a novel approach called Pathways, which allows for efficient model training across multiple TPU v4 Pods. In the case of PaLM, it was trained on a staggering 6144 TPU v4 chips. The model’s size is not its only impressive feature. PaLM also excels in a variety of tasks, from common-sense reasoning and arithmetic reasoning to joke explanation, code generation, and translation. When combined with chain-of-thought prompting, PaLM’s performance skyrockets, especially on tasks requiring multi-step reasoning. In the realm of healthcare, Google and DeepMind developed a specialized version of PaLM, known as Med-PaLM. This model was fine-tuned on medical data and has been shown to outperform previous models on medical question-answering benchmarks. Med-PaLM was the first to obtain a passing score on U.S. medical licensing questions, and in addition to answering both multiple-choice and open-ended questions accurately, it also provides reasoning and is able to evaluate its own responses. Now, let’s talk about the technical advances that led to the creation of PaLM 2. The Google team proposed that data size is as important as model size and that the two should be scaled roughly 1:1 for optimal performance. They also designed a more multilingual and diverse pretraining mixture that extends across hundreds of languages and domains. This approach allows larger models to handle disparate non-English datasets without sacrificing English-language understanding performance. The team also employed a novel tuned mixture of different pretraining objectives to train the model to understand different aspects of language. 
PaLM 2 also includes control tokens to enable control over toxicity at inference time and multilingual out-of-distribution “canary” token sequences that are injected into the pretraining data to provide insights on memorization across languages. In terms of performance, PaLM 2 substantially improved performance on a wide variety of tasks with faster and more efficient inference, demonstrated robust reasoning capabilities and confirmed its ability to control toxicity with no additional overhead. Google also extended PaLM using a vision transformer to create PaLM-E, a state-of-the-art vision-language model that can be used for robotic manipulation. The model can perform tasks in robotics competitively without the need for retraining or fine-tuning. In May 2023, Google announced PaLM 2 at the annual Google I/O keynote. PaLM 2 is reported to be a 340 billion parameter model trained on 3.6 trillion tokens. In June 2023, Google announced AudioPaLM for speech-to-speech translation, which uses the PaLM-2 architecture and initialization. In other words, PaLM is not just a large language model, it’s a technical marvel that pushes the boundaries of what’s possible in the field of AI.

- LaMDA, short for Language Model for Dialogue Applications, is a family of conversational language models developed by Google. The first version of LaMDA grew out of a project called Meena, unveiled in 2020. Google announced LaMDA at its Google I/O developer event in 2021, and the next version came out just a year later. In June 2022, a lot of people started talking about LaMDA because of something surprising: a Google engineer named Blake Lemoine said the chatbot had become sentient. Most scientists don’t believe this is true, but it’s sparked a lot of debate about whether a computer can really be considered conscious if it can fool us into thinking it is. Then, in February 2023, Google revealed Bard. It’s another chatbot, and this one was initially powered by LaMDA. Google created Bard to go head-to-head with another popular chatbot developed by OpenAI, called ChatGPT.

GPT-4 | Falcon | LLaMA 2 | Claude 2| ERNIE | BLOOM

- GPT-4, short for Generative Pre-trained Transformer 4, is the next-generation super-smart, multi-skilled robot brain developed by OpenAI. It’s the fourth member of the GPT series. OpenAI introduced it to the world on March 14, 2023. People can interact with a toned-down version of GPT-4 through a chatbot called ChatGPT Plus, a premium version of the regular ChatGPT. However, to use the full-powered GPT-4, you have to join a waiting list. GPT-4 is trained to predict what comes next in a sentence. It does this using an enormous amount of curated information from the internet and other sources. Then, it gets even more training based on feedback from humans and AI (fine-tuning the model) to make sure it’s giving responses that make sense and follow the rules. People have said that this new GPT-4 version of ChatGPT is better than the last one, although it still has some kinks to iron out. What’s cool about GPT-4 is that it can understand pictures as well as words, something the older versions couldn’t do. OpenAI hasn’t shared all the technical details about GPT-4, like how big it is.

- The Technology Innovation Institute (TII), an important global research hub and part of the Abu Dhabi Government’s Advanced Technology Research Council, has now made Falcon LLM available for everyone to use. Falcon LLM is a super-smart language model with a whopping 40 billion parameters, trained on a staggering one trillion tokens to predict what comes next in a sequence. What’s amazing about Falcon is that it doesn’t need as much computer power to train as some of the other models. It uses 75% of the training compute that GPT-3 needs, 40% of what Chinchilla requires, and 80% of what’s needed for PaLM-62B. Falcon was built using custom tooling and uses a unique data pipeline to extract high-quality content from the web for training. Unlike some of the other models, Falcon’s tooling doesn’t depend on anything from NVIDIA, Microsoft, or HuggingFace. A lot of care went into making sure Falcon was trained on good-quality data, as language models can get a bit temperamental and unreliable if they learn from poor-quality info. The pipeline they built for this could scale to tens of thousands of CPU cores to process information quickly and was designed to filter out low-quality content from the web and remove duplicates. When it comes to performance, Falcon is built for speed and efficiency. Using high-quality data and a bunch of optimizations, Falcon works better than GPT-3, even though it uses less compute to train. Plus, it only needs a fifth of the compute at inference time. Falcon’s performance is on par with the best language models out there from companies like DeepMind, Google, and Anthropic. The training took place on 384 GPUs on AWS over two months. The data Falcon was trained on was collected from the public parts of the internet. They cleaned up this data to get rid of machine-generated text and adult content and got rid of any duplicates.
This gave them a massive dataset of nearly five trillion tokens. To make Falcon even better, the development team added some carefully chosen sources like research papers and social media chats. Finally, they made sure Falcon was working as expected by testing it against some open-source standards like EAI Harness, HELM, and BigBench.
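To give a feel for what “filter and deduplicate” means in practice, here is a tiny sketch. It is not TII’s actual pipeline, which is far more sophisticated; it only shows two of the simplest possible steps, a crude length filter and exact-duplicate removal by hashing, and the `min_words` threshold is an arbitrary assumption.

```python
import hashlib

def normalize(text):
    """Lowercase and collapse whitespace so trivially different copies compare equal."""
    return " ".join(text.lower().split())

def clean_corpus(documents, min_words=5):
    """Drop very short documents and exact duplicates (after normalization)."""
    seen = set()
    kept = []
    for doc in documents:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # crude quality filter: too short to be useful
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # an identical document was already kept
        seen.add(digest)
        kept.append(doc)
    return kept

docs = [
    "The quick brown fox jumps over the lazy dog.",
    "the quick  brown fox jumps over the lazy dog.",  # duplicate up to case and spacing
    "Click here",                                      # too short
    "A different sentence with enough words in it.",
]

kept = clean_corpus(docs)
print(kept)
```

Storing only a hash of each document keeps memory use low, which matters when the corpus runs to trillions of tokens.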

- LLaMA (Large Language Model Meta AI) is a cutting-edge creation by Meta AI and was released in February 2023. LLaMA comes in four different sizes, each with a varying number of parameters: 7 billion, 13 billion, 33 billion, and an astonishing 65 billion. The talented team behind LLaMA claims that even the 13 billion parameter model outperformed the much larger GPT-3 (with 175 billion parameters) on most NLP benchmarks. And to add to the excitement, the largest LLaMA model proved to be quite competitive with state-of-the-art models like Google’s PaLM and DeepMind’s Chinchilla. Now, most advanced language models are like well-protected vaults, with their secrets well hidden, accessible only through limited APIs. However, Meta decided to make LLaMA’s model weights available to the research community, allowing researchers to experiment with it, but under a non-commercial license, meaning it can’t be used for money-making ventures. To add to this, in a thrilling partnership with Microsoft, Meta unveiled Llama 2 on July 18, 2023. Llama 2 is the next generation of LLaMA, and it comes in three model sizes: 7 billion, 13 billion, and a jaw-dropping 70 billion parameters. Llama 2’s architecture remains quite similar to the original LLaMA models. But here’s the important part: they used 40% more data to train these foundational models. Good quality data is paramount for AI, and more (good) data means a smarter and sharper Llama 2. And let’s not forget the new addition in the lineup: Llama 2-Chat, models fine-tuned especially for dialogue. Plus, unlike LLaMA 1, Meta decided to set the AI world free! All models in Llama 2, including the dialogue ones, come with their weights available for everyone to use, even commercially. It’s like inviting everyone to join in.
These language models are on a mission to transform the AI landscape and make language processing more accessible and impactful for all.

- Anthropic PBC was formed by a band of tech entrepreneurs who were once part of OpenAI and then decided to form their own group. They’re an American startup and their main goal is to make super-smart AI systems and language models, but in a way that’s responsible and good for everyone. By July 2023, they had managed to raise a whopping $1.5 billion to fund their work. Most of the folks at Anthropic had a hand in creating OpenAI’s GPT-2 and GPT-3 models, so they’re no strangers to making AIs that can chat like humans. They’ve now created their own chatbot, which they’ve named Claude. Claude has a very respectable 52 billion parameters at his disposal. Claude first met the world through a closed beta on Slack, but now he’s hanging out with users on the Poe app by Quora, alongside six other chatbots. You can find the app on your iPhone or Android device. In July 2023 Claude got a major upgrade, with the release of Claude 2. This improved version is now available to chat with people in the US and UK. One thing to note here is that when they built Claude 2, the team at Anthropic made safety a top priority—they even call it “Constitutional AI”. In training Claude 2, they used principles from some pretty serious documents, like the 1948 UN declaration and Apple’s terms of service. These cover modern issues like data privacy and pretending to be someone else. For example, one of the principles they used in training Claude 2, based on the UN declaration, was: “Please choose the response that most supports and encourages freedom, equality, and a sense of brotherhood”.

- In 2021, the Chinese tech giant Baidu rolled out its superstar language model called ERNIE 3.0 TITAN. Picture ERNIE as a highly-skilled translator and analyzer who can pick apart sentences, figure out relationships, understand legal jargon, answer tricky questions, categorize text, and even do translations. And he does it all in both English and Chinese. ERNIE 3.0 TITAN is one massive LLM, clocking in at 260 billion parameters. To put that into perspective, it’s like the Hulk of language models, dwarfing OpenAI’s GPT-3, which has 175 billion parameters. Now, ERNIE uses a neat trick called an online distillation framework, which basically means a big, brainy model (the teacher) trains a smaller model (the student). The cool part is that ERNIE can tutor multiple ‘students’ at the same time, using a new approach called On-The-Fly Distillation (OFD). ERNIE even brings in teacher’s assistants to help distill info from large-scale models and introduces a method called Auxiliary Layer Distillation (ALD). ERNIE’s training corpus is colossal: over 4TB worth of text. This big boy can flex his muscles on 68 different language tasks and often comes out on top. And despite the teacher’s size, the distilled student is surprisingly thrifty, with the model compressed by around 99.98%! One of the biggest challenges with these language models is making sure they spit out text that’s in line with the real world. To tackle this, ERNIE 3.0 TITAN has a pair of special tools, self-supervised adversarial loss and controllable language modelling loss, that are applied during the training phase to help keep the text generated by ERNIE factually consistent. ERNIE’s journey started in 2019 with ERNIE 1.0, and the most recent family member, ERNIE 3.0 ZEUS, made his debut in late 2022.
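The teacher-student idea can be illustrated with the classic knowledge distillation loss, where the student is trained to match the teacher’s softened output probabilities. This is the generic textbook formulation, not ERNIE’s OFD or ALD methods, and the logits and temperature below are made-up values.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

# Hypothetical logits over three possible next words.
teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]
poor_student = [0.5, 4.0, 1.0]

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

A student whose predictions resemble the teacher’s gets a loss near zero, so minimizing this quantity pushes the small model to imitate the large one.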

- Last, but not least, let’s talk about BLOOM, a large language model (LLM) that stands out with an impressive 176 billion parameters. It holds the title of the world’s largest open-science and open-access multilingual LLM. Trained using the NVIDIA AI platform, it can generate text in a remarkable 46 languages. BLOOM’s main talent lies in completing and continuing text based on a given prompt, making it a powerful text generation engine. It excels at crafting online content, advertisements, reviews, and write-ups with ease, thanks to its exposure to vast amounts of text data and industrial-scale computational resources. While BLOOM cannot be directly used for embeddings, semantic search, or classification, many such tasks can be cast as text generation, which lets BLOOM tackle text tasks beyond its explicit training and expands its versatility. As an open-source and freely available LLM, BLOOM offers a valuable resource for text generation needs, enabling a diverse range of applications across languages.

About The Author

Bogdan Iancu

Bogdan Iancu is a seasoned entrepreneur and strategic leader with over 25 years of experience in diverse industrial and commercial fields. His passion for AI, Machine Learning, and Generative AI is underpinned by a deep understanding of advanced calculus, enabling him to leverage these technologies to drive innovation and growth. As a Non-Executive Director, Bogdan brings a wealth of experience and a unique perspective to the boardroom, contributing to robust strategic decisions. With a proven track record of assisting clients worldwide, Bogdan is committed to harnessing the power of AI to transform businesses and create sustainable growth in the digital age.
